Porting Cave Story to a Sun Ultra 1 Workstation

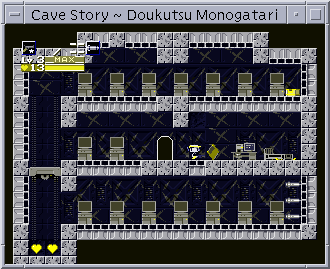

For the unaware, Cave Story is a platforming Metroidvania released by Daisuke Amaya in 2004. Besides being a fun game, it has several qualities that made porting it to a Sun Ultra 1 seem like a good idea. It’s a strictly 2D game, it can natively run at low resolutions (down to 320x240), there’s a high-quality decompilation available in the form of CSE2, and it came out in 2004 optimized for low-end machines of the era. Surely a professional workstation machine from 1995 should be able to run it with ease.

Initial work

To avoid boxing myself in with regards to optimization, I decided to forgo the very convenient backends offered by CSE2’s portable branch. One of the two SDL backends may have seemed like a natural choice since at least one of them does ostensibly support old Solaris, but my ability to optimize them and fix things inside goes down dramatically when I don’t already understand the internals of the library, for obvious reasons. I also felt that despite my vague and uneducated dislike of X11, it seemed like something I should know how to program for, just as a general knowledge kind of thing. So I got started writing a raw X11 backend for CSE2.

Adding a new backend isn’t particularly hard; the backend system is quite well made and makes additions easy. The backends themselves are also relatively simple, so creating a new one isn’t all that much work, assuming you start from the right place. The backend system is structured like this:

- Renderer
  - (various renderers including Software)
  - Window
    - (…)
    - Software
      - (various backends for displaying the software renderer’s pictures)
- Audio
  - (various audio mixers including SoftwareMixer)
  - SoftwareMixer
    - (various backends for playing back SoftwareMixer’s samples)
- Platform
  - (various backends for setting up and dealing with platform specifics)
- Controller
  - (various backe—who cares, I’m not hooking up a gamepad to a Sun workstation)

These form a fairly simple interface to the game, which was originally built around DirectX. Given that I have zero faith in this mid-90s workstation’s 3D-acceleration capabilities, I went with the software renderer. It fairly straightforwardly takes in graphics from the game via the RenderBackend_UploadSurface function and stores it away, and has RenderBackend_Blit draw it to the screen one byte at a time. Transparency exists in the form of completely black pixels in the texture, and handling transparency is enabled via a boolean parameter to the blit function. When in the non-transparent mode, lines are drawn via memcpy rather than a byte-by-byte loop. The renderer also does some less interesting things like drawing solid colors and small bitmap fonts.
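To make the two blit paths concrete, here is a minimal sketch in C. It is not CSE2’s actual code: the name blit_rows, its parameters, and the packed 3-byte RGB layout are assumptions made for illustration, and it does no clipping at all.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical sketch of a software blit over packed 3-byte RGB pixels.
 * Pitches are in bytes per row. No clipping is done, so the caller must
 * make sure the rectangle fits inside both buffers. */
static void blit_rows(const unsigned char *src, size_t src_pitch,
                      unsigned char *dst, size_t dst_pitch,
                      size_t width, size_t height, int colour_key)
{
    assert(src != NULL && dst != NULL);

    for (size_t y = 0; y < height; ++y) {
        const unsigned char *s = src + y * src_pitch;
        unsigned char *d = dst + y * dst_pitch;

        if (!colour_key) {
            /* Opaque path: one memcpy per row. */
            memcpy(d, s, width * 3);
            continue;
        }

        /* Transparent path: pure black (0,0,0) source pixels are skipped,
         * so whatever was already in the destination shows through. */
        for (size_t x = 0; x < width; ++x, s += 3, d += 3) {
            if (s[0] == 0 && s[1] == 0 && s[2] == 0)
                continue;
            d[0] = s[0];
            d[1] = s[1];
            d[2] = s[2];
        }
    }
}
```

The per-pixel test in the transparent path is what makes it so much more expensive than the memcpy path, which becomes relevant once optimization starts.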

The first part of the backend I actually touched was the “window” part, which provides the rendering system with a framebuffer and opens a window for it on platforms where that’s appropriate. The other backend part I had to touch upon getting started was “platform”. A platform backend’s main jobs are taking keyboard input and keeping track of time. It’s also a convenient place to do setup prior to actually opening a window.

Let’s go over a few of the functions a window backend needs to provide:

- WindowBackend_Software_CreateWindow takes in a title and window dimensions and makes a window exist somewhere.
- WindowBackend_Software_GetFramebuffer takes a pointer to a size_t, sets it to the framebuffer’s pitch, then returns the framebuffer.
- WindowBackend_Software_Display is called when the renderer is done drawing and the picture needs to go on the screen.

Apart from confusion caused by X11’s lack of appropriate documentation (at least appropriate to my attention span) it was quite easy to open up a window. You open the default display, you ask it for its default screen, and you use the display and screen in a call to XCreateSimpleWindow. Well, no, you’re not done yet. You also have to map the window, which means telling the X server to display it. And one more thing, you have to do some magic with "WM_DELETE_WINDOW" and XSetWMProtocols before the window actually shows up, though this might only be on modern systems? I initially developed the X11 backend on my modern Linux computer since accessing the target machine required a 43

Providing a framebuffer required first allocating an appropriately sized buffer that the game can draw into, then another one that X can use to draw on the screen. These are different pixel formats. Cave Story likes to work with pixels in the RGB format, whereas X prefers BGRX, where X is discarded padding. I converted between these in the WindowBackend_Software_Display function. This was done rather poorly and inefficiently. Finally, we can XPutImage the image to the window and watch it appear. An XImage is backed by an in-memory framebuffer, so drawing to it is easy, we just convert the software renderer’s framebuffer to X’s format with the renderer’s framebuffer as the source and the XImage’s buffer as the destination, and then just XPutImage.
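The per-frame conversion described above can be sketched like this. The function name and parameters are made up for the example, and the X calls are left out so that only the pixel shuffling remains; the real backend’s version may look quite different.

```c
#include <assert.h>
#include <stddef.h>

/* Convert a frame of packed 3-byte RGB pixels into 4-byte BGRX pixels.
 * The fourth byte is padding and is simply set to 0 here.
 * Pitches are in bytes per row. */
static void convert_rgb_to_bgrx(const unsigned char *rgb, size_t rgb_pitch,
                                unsigned char *bgrx, size_t bgrx_pitch,
                                size_t width, size_t height)
{
    assert(rgb != NULL && bgrx != NULL);

    for (size_t y = 0; y < height; ++y) {
        const unsigned char *s = rgb + y * rgb_pitch;
        unsigned char *d = bgrx + y * bgrx_pitch;

        for (size_t x = 0; x < width; ++x) {
            d[0] = s[2]; /* blue */
            d[1] = s[1]; /* green */
            d[2] = s[0]; /* red */
            d[3] = 0;    /* unused padding */
            s += 3;
            d += 4;
        }
    }
}
```

Here the destination would be the XImage’s buffer, and the result would then go to the window with XPutImage. Touching every pixel of every frame like this is pure overhead, since the renderer has already drawn the frame once.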

At this point I was working on a modern Linux computer since I don’t always have access to our target machine, and the target machine also struggles with Git for reasons that aren’t easy to solve.

But on this modern Linux machine, this code seemed to work quite well. The game ran in its (very) little window at the right speed, which strangely enough is 50 FPS. Yes. This Japanese PC game from 2004 is designed to run at 50 FPS. No, I did not make a mistake, you did not read that wrong. Strange framerate aside, nothing was wrong and I was feeling quite proud of myself. Then came the real hardware testing.

The first test

Getting the game running on the actual Sun Ultra was a challenge in and of itself. Because it felt like it would take forever, and because I was still afraid of the file system collapsing and/or being completely filled up again (be thankful you’re not using Solaris 2.6’s UFS), I started by not actually running it on the Sun. Instead, I used X for what it was ostensibly designed for. I sent the game’s graphics over the network like an insane person.

After a little wrestling with X’s authorization magic, we were able to get the old classic xeyes running on my laptop with its window on the Sun, and then we tried Cave Story. Unfortunately the image was extremely wrong. There was movement, but no recognizable shapes. After some thinking and xdpyinfoing we found out that the computer was running in an 8-bit color mode which obviously didn’t match the backend’s pixel format of four bytes per pixel. So, we told the game to use what’s called an XVisual with 24-bit color and that gave us a crash. Turns out that Sun’s version of X11 can’t just switch video modes when it’s needed, and runs in the least flexible mode by default. So after some reading that was not at all fun, I figured out how to make X start with a different color mode. There are instructions for doing that in the appendix and Git repo.

Starting the game now, it… works, but the colors are wrong. In fact, in

After copying over the source tree to the machine, I realized I would need some way to build it. Sun did not ship CMake, that’s for sure. Unfortunately the latest CMake binary we could find for the Sun was a whole major version and a bit behind where it needed to be. So instead, I mocked up a quick script in my language of choice (which I will not be telling you about for fear of coming across as a preacher) which generated a long but simple shell script that just ran g++ over and over on just the files I needed. This was eventually replaced with a partially hand-written Makefile.

So when I first compiled there were some minor problems with the one mandatory dependency, stb_image. The first problem was that apparently stdint.h, which provides sane and consistent names for integer data types, does not exist on the Sun. Evidently this was a newer addition to C. Luckily, faking it was an option.

```c
#pragma once

/* Stand-ins for the fixed-width types that stdint.h would normally provide. */
typedef unsigned short uint16_t;
typedef short int16_t;
typedef unsigned int uint32_t;
typedef int int32_t;
```

That’s it, that fixed that. The second problem was that stb_image was trying to use thread-local variables, which Solaris 2.6 and/or this version of GCC apparently doesn’t support. This was fixed by adding #define STBI_NO_THREAD_LOCALS right before the point where stb_image.h is included. This was enough to get the game to compile. At this point, I was expecting to see the game run at full speed, or at least pretty close, instead I got– wait…

```
ld.so.1: ./CSE2: fatal: libstdc++.so.6: open failed: No such file or directory
```

Oh, right… LD_LIBRARY_PATH=/usr/tgcware/lib was necessary so that the dynamic linker can find our C++ standard library. I bet there’s a better way, but this was deemed good enough. Now! I expected a pretty good speed, instead I got… roughly 30 FPS, sometimes higher, usually a bit lower. Like 28.

Now that’s not unworkable, there are several points we could improve upon. But this is not playable. And this was with GCC’s -O2 optimization option turned on. -O3 was later tested but I chose not to trust its stability and stuck to -O2.

Initial Optimization

The first idea was to skip performing the full screen format conversion by modifying the software renderer to work directly in X’s preferred color format. This required quite a few changes, since the software renderer draws pixels in quite a few places. First up the framebuffer size and pitch given to the software renderer by the window backend needed to be adjusted to fit the extra dead byte per pixel. Then the several drawing functions were hacked to simply do an extra byte. RenderBackend_Blit’s transparency path got a pair of extra increments per pixel and its opaque path had its straight line memcpy adjusted.

I also altered the RenderBackend_UploadSurface function to convert its input to the 4-byte pixel format so that during the actual rendering process we’ll never have to touch the 3-byte format. The full screen conversion code was removed, and the new version tested. It got… roughly 30 FPS. Sometimes lower, usually a bit higher. Like 32.

Another flaw with this version was that it more or less reverted the color fix, since I was now working on my laptop again and hadn’t bothered to add any kind of endianness #ifdefs.
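For illustration, here is one way the byte order problem could be handled without #ifdefs: detect the host’s endianness at runtime and store the four bytes accordingly. This is a sketch under a loudly stated assumption, namely that the X server wants each pixel as the 32-bit value 0x00RRGGBB in the host’s own byte order. That matches the BGRX layout on a little-endian laptop, but I have not verified it against the Sun’s hardware. The function names are made up.

```c
#include <assert.h>
#include <string.h>

/* Returns 1 on a big-endian host (such as a SPARC machine) and 0 on a
 * little-endian one (such as an x86 laptop). */
static int host_is_big_endian(void)
{
    const unsigned int probe = 1;
    unsigned char first_byte;
    memcpy(&first_byte, &probe, 1);
    return first_byte == 0;
}

/* Store one pixel as four bytes.
 * ASSUMPTION: the display expects the 32-bit value 0x00RRGGBB in host
 * byte order, i.e. B,G,R,X in memory on little-endian hosts and
 * X,R,G,B in memory on big-endian hosts. */
static void store_pixel(unsigned char *dst, unsigned char r,
                        unsigned char g, unsigned char b)
{
    assert(dst != NULL);

    if (host_is_big_endian()) {
        dst[0] = 0;
        dst[1] = r;
        dst[2] = g;
        dst[3] = b;
    } else {
        dst[0] = b;
        dst[1] = g;
        dst[2] = r;
        dst[3] = 0;
    }
}
```

Since textures are converted once at upload time, the endianness check could be hoisted out of the per-pixel path entirely; it is kept inline here only to keep the sketch short.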

XShm: Making X programs realize it’s not 1987

The X Window System was originally designed to be able to transparently run on a different machine than the program in need of graphics. This has a great deal of benefits, but graphical performance is not one. As it turns out, we’ve been sending our whole framebuffer over a socket every single frame. After my friend Hugo sent me a PDF containing vague information about various Sun video cards, I discovered that our video card had deprecated something called “Direct Xlib”. Even disregarding the name sounding a little familiar, this caused me to seize up with excitement. As my eyes flew across the screen, I could feel all 50 frames per second making themselves viable.

“Direct Xlib is not supported on the Creator accelerator. The X shared memory transport feature (new in Solaris 2.5) should be used instead.”

A quick search later, I had the knowledge to make what was to become the most important optimization making full speed gameplay viable.

The X Shared Memory Extension (or XShm for short) allows the X server and client to share memory buffers, which can be used to hold, say, an image. The extension is not entirely X’s creation, but instead works with part of the UNIX System V standard (check out man shmget). Using this functionality, we don’t need to send the image across the network, both processes just already have it. While writing the code that would use this, I discovered that Solaris 2.6 has a very low default limit on how big a shared memory buffer can get. Luckily, although this was slightly smaller than a 640x480 framebuffer, it was significantly larger than two 320x240 framebuffers, so there’s room for our framebuffer and plenty more to spare.

The actual process of setting up the shared memory buffers is a little clunky and involves some very UNIX function names, shmget and shmat, for getting an ID and process-local address respectively. We then have to bind these shared buffers to images. There were some problems initially, but eventually I realized that the resolution was set too high on the Sun. I had apparently been testing with a 640x480 config file from my laptop. I really should have been checking errno, but in my defense, checking errno correctly and thoroughly is annoying.
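As a rough sketch of what the System V half of this looks like with the errno checking included, consider the following. The helper names are hypothetical and the X side (binding the segment to an image) is left out entirely; only shmget, shmat, shmdt and shmctl are real calls.

```c
#include <assert.h>
#include <errno.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/shm.h>

/* Allocate and attach a private System V shared memory segment.
 * Returns the process-local address, or NULL after printing the reason.
 * On success *out_id holds the segment ID, which is the value the X
 * server would need in order to attach the same segment. */
static void *create_shared_buffer(size_t size, int *out_id)
{
    assert(out_id != NULL);

    int id = shmget(IPC_PRIVATE, size, IPC_CREAT | 0600);
    if (id == -1) {
        /* Asking for more than the system limit typically fails here. */
        fprintf(stderr, "shmget(%lu bytes): %s\n",
                (unsigned long)size, strerror(errno));
        return NULL;
    }

    void *address = shmat(id, NULL, 0);
    if (address == (void *)-1) {
        fprintf(stderr, "shmat: %s\n", strerror(errno));
        shmctl(id, IPC_RMID, NULL); /* don't leak the segment */
        return NULL;
    }

    *out_id = id;
    return address;
}

/* Detach the buffer and mark the segment for removal. */
static void destroy_shared_buffer(void *address, int id)
{
    shmdt(address);
    shmctl(id, IPC_RMID, NULL);
}
```

A 320x240 framebuffer at four bytes per pixel is 307,200 bytes, while a 640x480 one is 1,228,800 bytes. With a check like the one above, a too-large request shows up as an immediate error message instead of a mystery.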

So, how’s the performance? Roughly 50 FPS! Sometimes lower, usually locked at 50. Which if you remember, is the correct but very odd framerate. Occasionally the framerate counter would show 51 for a second, sometimes it would show 47 during heavy scenes. It’s not the ideal way to play, but it certainly runs!

Although… occasionally the screen would flash, showing an unfinished frame underneath. As it turns out, the X server draws directly from the buffer it’s given, exactly as promised. But that’s a problem when the game starts drawing the next frame before the X server has finished putting the last one up. This could be solved by having double buffering, since the renderer does provide the window backend a means of switching out the buffer every frame, but at this point I felt like that could be solved later, and moved on.

Beginning the great odyssey of hearing things

Now that the game played more or less fine I turned my attention to sound. I really wanted the sound to work since Cave Story can’t really be called a complete experience without its excellent soundtrack. Sound on this era of Solaris was very simple, but also quite unpleasant. Instead of a proper API that can invoke a callback to fetch samples, it’s the program’s job to feed the audio buffer via a device file, /dev/audio. The game is not particularly friendly to this approach. It expects, quite reasonably, that knowing when to produce more sound is someone else’s problem, and simply provides the audio backend with a means of getting what it needs. Unfortunately, by failing to provide a sufficient audio API, Sun has made this my problem.

At first it sounds difficult to reconcile the needs of a dumb pipe with the needs of the game, but luckily it’s not as bare an interface as I may first have made it sound. There is some limited metadata which can be fetched. ioctl, everybody’s favorite system call*, can be called with a magic constant to get and set some basic information about the audio system’s state via a struct. Things like, “how many samples have you received?”, “any errors?”, and “how many zero-length buffers (end-of-file records) have you processed?”.

Now I’ll admit, an “end-of-file record” was not something I had heard of before. A quick internet search later I felt quite validated, since the internet seemingly hadn’t heard of it either outside of outdated versions of FORTRAN (yes, there are allegedly non-outdated versions of FORTRAN), and they didn’t seem to match the description of “zero-length buffer”. Naturally, I completely ignored this and assumed that things would work out fine anyway.

The first idea I had for structuring the audio backend was to have a secondary thread for audio and have it go as hard as it could. It would check how many of those end-of-file records the audio system had processed, wait until that number increased, store the new number, feed /dev/audio another mouthful of audio, and delay using the usleep function for as long as it seemed like it would take to play that audio. Then it would repeat, see if it had played it, etc.

Things did not work out fine

The speaker remained dead silent. I tried quite a few things, and got plenty of suggestions from others on what to try, but couldn’t get the game to give it any sound at all. I assumed at this point that threading problems might be at fault, so I decided to take care of that.

To ensure there wouldn’t be race conditions with the main game thread, I made the audio thread pause the game thread while performing audio work. This makes sense to do since the normal expectation is that the game thread itself will be interrupted to fetch audio.

I tried implementing something like this and had bad results. And by “bad results” I mean that it crashed the whole process when the game thread received its pause signal. Even though I properly set up a handler for it. At this point I returned to optimizing the graphics. That felt especially pressing since my non-working audio tests had slowed the game down considerably, specifically due to the audio rendering parts. The screen flashing was also getting pretty bad.

Drawing completely transparent empty tiles considered harmful

I was sitting at work (internship but who’s really counting) with no tasks lined up, and I decided I might as well optimize the rendering code (this was many a moon ago, I no longer work there). I knew from looking at the sound code that sound would chew through CPU time like a dog through an expensive leather couch you’re holding onto for your rich uncle, so I felt I’d better prepare for that. I had recently watched an old marketing video for the Symbolics Joshua expert system, which was the big thing in 80s AI research/hype (it is no longer considered AI because we understand its limits and the hype died). At one point, the host Steve Rowley says:

“Whenever a program attempts a large number of anything and only two percent work, that should wave a little red flag in your head that says some pretty serious efficiency gains can be had.”

Now, this should be obvious to anyone who isn’t a prompt engineer, and admittedly the video was made to be somewhat boss-friendly. This was certainly obvious to me at least. But him saying it out loud had my brain automatically trying to find an example of where I had personally seen this, and I immediately went to CSE2’s software renderer. Specifically, the RenderBackend_Blit function. As it turns out, the only time the opaque path was ever taken was when drawing stage backgrounds. This is despite the fact that the vast majority of tiles on the screen are opaque. I had discovered this earlier when testing how performance would be if no transparent tiles were drawn at all, and only the background showed up. Now, I had an idea for how to optimize this.

Instead of assuming every tile is transparent, I could first check which tiles in a given texture need transparency and store the results. I quickly wrote a function to dump out uploaded textures to check my assumptions, and learned two things.

- Tiles are 16x16 pixels large. Further testing showed that larger objects are in fact drawn as multiple tiles, not big blocks.

- The top-left tile of a level graphics set is always completely black (i.e. transparent).

Just to quickly sanity check that second one, I added a quick bypass to the blit function that just skips rendering the top left tile for the level graphics texture. It made no visible difference except to performance, which improved significantly (by this point I was testing on my laptop with the framelimiter hacked out to see just how much of a margin I had). It turns out that for every tile on the screen that doesn’t have any visible tile, the game draws a completely empty tile. This may have worked fine with DirectX, but it made quite a bit of trouble for the software renderer.

Considering how much of the game is drawn as 16x16 tiles, I decided that this optimization was only needed for those, and got to work. RenderBackend_UploadSurface got modified to compute a set of “cheap rectangles” for each upload. This goes through each 16x16 tile in the texture and checks if the tile contains any completely black pixels. If it does not, its starting X and Y texture coordinates (combined into one 32-bit int) are stored into the surface’s “cheap rectangles” set. RenderBackend_Blit got a piece of code that checks if we’re drawing a 16x16 tile, and if we are, disables transparency if the tile is found in the cheap rectangles set. I kept the little snippet that skips the always empty tile immediately.
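The scan described above could look roughly like the following. All names are invented for the sketch, a plain array stands in for whatever set type the real code uses, and it assumes that any padding byte in a pixel is stored as zero so that a black pixel is all zero bytes.

```c
#include <assert.h>
#include <stddef.h>

#define TILE_SIZE 16

/* Pack a tile's texture coordinates into one 32-bit key:
 * X in the high half, Y in the low half. */
static unsigned int tile_key(unsigned int x, unsigned int y)
{
    return (x << 16) | (y & 0xFFFFu);
}

/* Returns 1 if the pixel at p is completely black (all bytes zero). */
static int pixel_is_black(const unsigned char *p, size_t bytes_per_pixel)
{
    for (size_t i = 0; i < bytes_per_pixel; ++i)
        if (p[i] != 0)
            return 0;
    return 1;
}

/* Returns 1 if the 16x16 tile starting at (tile_x, tile_y) contains no
 * black pixels, meaning it can be drawn without transparency checks. */
static int tile_is_opaque(const unsigned char *pixels, size_t pitch,
                          size_t bytes_per_pixel, size_t tile_x, size_t tile_y)
{
    for (size_t y = 0; y < TILE_SIZE; ++y) {
        const unsigned char *p =
            pixels + (tile_y + y) * pitch + tile_x * bytes_per_pixel;
        for (size_t x = 0; x < TILE_SIZE; ++x, p += bytes_per_pixel)
            if (pixel_is_black(p, bytes_per_pixel))
                return 0;
    }
    return 1;
}

/* Scan a texture and store the keys of all fully opaque tiles in `keys`.
 * Returns how many keys were stored (never more than max_keys). */
static size_t find_cheap_rectangles(const unsigned char *pixels, size_t pitch,
                                    size_t bytes_per_pixel,
                                    size_t width, size_t height,
                                    unsigned int *keys, size_t max_keys)
{
    size_t count = 0;
    assert(pixels != NULL && keys != NULL);

    for (size_t ty = 0; ty + TILE_SIZE <= height; ty += TILE_SIZE)
        for (size_t tx = 0; tx + TILE_SIZE <= width; tx += TILE_SIZE)
            if (count < max_keys &&
                tile_is_opaque(pixels, pitch, bytes_per_pixel, tx, ty))
                keys[count++] = tile_key((unsigned int)tx, (unsigned int)ty);

    return count;
}
```

The cost of the scan is paid once per upload, while the lookup in the blit function is a single key comparison per tile, which is a good trade when the same tiles are drawn every frame.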

This change massively increased the game’s performance and gave an approximate 30 frames per second margin on the Sun Ultra. We had 30 extra frames per second to work with. I was extremely pleased with this and felt kind of silly for not implementing it earlier.

Making machines scream

Now that the game played nearly perfectly, save for the lack of audio and the occasional screen flashing that the lack of double buffering still caused, I finally got started on a serious attempt at doing the audio. I immediately started discovering my past mistakes. First, I was using the data fetched from /dev/audio via ioctl completely wrong, from having either misread or misremembered how the structs looked.

Second, I realized that we weren’t getting into the main part that actually wrote out the sound, so I had a look and indeed the “end-of-file counter” was never being incremented. In the wait for a better solution, I commented out the code that waits for that condition.

Third, I realized that thread pausing just wasn’t gonna work here, and removed it. Fourth, I discovered that for unknown reasons that still elude me as of writing, using open and write on /dev/audio just didn’t work right, at all. Nothing went out except settings via ioctl. Switching to fopen and fputc somehow made the speaker come to life. I still had to set the ioctl settings, and I didn’t yet know about fileno (which can get the file descriptor number out of a FILE*), so I first opened /dev/audio with open, set the settings, then closed it. This will turn out to have consequences.

But at this point I was just happy to hear some audio come out of the speaker. It didn’t sound any good at all, it wasn’t even recognizable as Cave Story’s music, but it did make all the eardrums in the room move.

Making machines cough

Several problems were encountered, most of which were very minor and due to a brief slip of the mind, so this section will only recap the interesting bits (it’s totally not because I have no Git history for this part because I only committed the working code).

The software audio mixer that’s being used generates its samples as signed 16-bit samples stored in longs, which I originally didn’t know how to handle correctly. A lot of back and forth there had the speakers screaming like a cat that hasn’t been fed in 45 minutes. Even when it sounded kind of okay, the sound was constantly clipping. This problem combined with the mess that was dealing with outputting a stereo signal to a mono output. Things were very wrong. Eventually, after being prodded by others in the room, I simply copied how the other backends handled the sample conversion, and that fixed the peaking problems. The trick was the way the samples were clipped. I’ve forgotten what the wrong way to do it was, but I was doing it. As for the stereo/mono problem, I eventually just turned on stereo output and that fixed that problem too… sort of.
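I can’t reproduce exactly what the other backends do here, so the following is only the usual saturating clamp for this situation, with made-up function names. The important property is that a mixed value outside the 16-bit range gets pinned to the nearest limit rather than being truncated, since truncation makes loud peaks wrap around to the opposite sign.

```c
#include <assert.h>
#include <stddef.h>

/* Clamp one mixed sample to the signed 16-bit range. Several sounds
 * summed together can exceed that range even though each one fits. */
static short clamp_sample(long sample)
{
    if (sample > 32767)
        return 32767;
    if (sample < -32768)
        return -32768;
    return (short)sample;
}

/* Convert a buffer of mixed samples (held in longs) to 16-bit output. */
static void convert_samples(const long *mixed, short *out, size_t count)
{
    assert(mixed != NULL && out != NULL);

    for (size_t i = 0; i < count; ++i)
        out[i] = clamp_sample(mixed[i]);
}
```

For example, a mixed value of 40000 comes out as 32767, which is audible as mild distortion at worst, instead of wrapping to a large negative value and producing a loud click.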

With this combination of settings, stereo, 8-bit depth, 8 kHz sampling rate, etc., the sound was coming out all wrong. I soon discovered (but didn’t yet quite internalize) that despite being set to 8-bit mode, and indeed outputting 8-bit sound on the speaker, the audio device wanted 16-bit samples in. So I was essentially skipping over every other sample when trying to output 8-bit sound in the way that made sense. So I changed the code to output 16-bit samples, but for some reason left the settings intact. That worked I guess.

What followed this was a lot of aimless flailing around trying to get the audio to actually work for more than the title screen music. Setting the delay between sound buffers to something lower than the playback rate would have the title screen music sound perfect, but got completely out of sync with the actual game. After a while, presumably the sound chip’s buffer would fill up and crash, or maybe start rejecting samples, though I’m not sure I ever saw this happen. Setting the delay too high would have it struggling to keep up with the game, constantly coughing and sputtering in between bursts of sound. Setting it to what was supposed to be the exact rate obviously didn’t work either, since the computer can’t be that precise.

Among the attempted solutions was one where the sound thread would write out some samples, and then wait for a buffer underrun by watching the audio system’s error counter. This didn’t work, and even if it had it wouldn’t have been any good. Eventually, I decided to go look for inspiration. I started reading the code for some other audio libraries, such as SDL’s audio subsystem. Audio library authors are mortal humans (or so they claim), so this had a decent chance of succeeding.

After my reading (more like quick glances at function names to be honest), I decided that what I should do is have a function that waits until some number of samples have been consumed. With that I could just blast out some audio, wait for some amount’s worth of samples to be consumed, then blast out some more. I hacked this up using the sound system’s samples counter, where it would have some amount of samples it waited to have played before continuing.

This didn’t quite work. The concept seemed sound (pun intended only retroactively), but it would act like there was no speed limit, blasting out audio as fast as it could and getting desynced from the game. But this was still very close to correct, since the concept is obviously proven to work in other contexts, and the audio did sound right.

Making machines sing

So why did checking the number of samples played not work? It’s because there’s no “samples played” counter. That’s right, the “samples counter” counts the number of samples sent, not played. It’s completely useless for playback. I would have to figure out that “end-of-file counter”. Luckily, it turned out to actually be quite simple. After switching to fopen and getting the file descriptor integer from that, I tried doing a write again, just like before, with NULL as the source buffer and 0 for the length, plus a fflush afterwards, just to be sure. And would you believe it? It worked.

I overhauled the code a bit to make way for this feature that now just sorta worked. A counter for the number of sample buffers written out is added. The soundWait function waits until at least one buffer more has been played back since when it was called. It’s also given a return value, the number of sound buffers that have been played back so far. The sample output function is changed to write an empty buffer using write after every buffer of samples, as well as increment the total written buffers counter.

For the main sound loop, we wait until a buffer has been played and save how many buffers have been played back. Then we make and write out new sound buffers until the amount written is at least three buffers ahead of the amount already played. Loop forever.

This way, if the sound output is lagging behind, it has a safe margin of error. Simply make and write more sound buffers until it’s caught up to and ahead of playback. This worked almost perfectly. Occasionally there were still buffer underruns, but they were mostly tolerable.
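The pacing rule boils down to a small piece of arithmetic, sketched below. The function name and the BUFFERS_AHEAD constant are made up for the example; the device access (waiting on the end-of-file counter and writing samples) is left out so that only the decision of how much to write remains.

```c
#include <assert.h>

/* How far ahead of playback the writer tries to stay, in buffers. */
#define BUFFERS_AHEAD 3

/* Given running totals of buffers played and buffers written, return
 * how many new buffers must be written so that the written total is at
 * least BUFFERS_AHEAD beyond the played total. */
static unsigned long buffers_to_write(unsigned long played,
                                      unsigned long written)
{
    unsigned long target = played + BUFFERS_AHEAD;

    /* A buffer can't have been played before it was written. */
    assert(written >= played);

    return written >= target ? 0 : target - written;
}
```

Each pass of the sound loop would then wait for one more buffer to finish playing, call something like this with the updated totals, and mix and write that many buffers. If the thread was delayed and playback has caught up, the result is larger than one, which is what lets it recover its margin.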

Making machin—Alarm Clock (program exited)

When I say it worked, I mean it worked for usually a few minutes (it varied) until the process suddenly died, leaving one lone message on its terminal: Alarm Clock. This had started happening earlier but I had dismissed it as probably being a sound buffer overrun crash. At this point that was obviously not the case. Some searching led me to find out that this is the message presented when a program fails to handle a SIGALRM signal. I was fairly certain that nothing should be sending that signal, so into GDB I went.

It took some doing to get GDB to stop on SIGALRM. By default it will pass SIGALRM through to the program unless there’s no handler, in which case it will silently absorb it. After setting it to stop, I discovered something nobody wants to have to deal with. A bug in libc.

usleep was used to implement Backend_Delay, a function which the game uses to pass the time between frames and which my audio code uses to wait for audio to play. And in my backtrace I now saw that the SIGALRM that was crashing the game was coming from inside usleep. This discovery made me not very happy. Luckily, Hugo was there to dig up a copy of the source code to Solaris 2.6’s libc and managed to find the source to usleep. And right there, in plain C, was the bug, as clear and obvious as it could possibly be. A textbook race condition.

On Solaris (which seems to have derived its libc from BSD’s), usleep is implemented using the UNIX timer and sigsuspend. The timer counts down from a set time and when it’s finished it sends the calling process a SIGALRM. sigsuspend will, as the name implies, suspend the thread until a signal arrives. Of course, the calling program may be using SIGALRM for its own purposes, so to avoid interfering with the program usleep first saves both the state of the timer as well as SIGALRM’s signal handler, before resetting the timer and setting SIGALRM’s handler to an empty function, since all it wants SIGALRM for is waking up from sigsuspend. When it wakes up, it restores these and returns.

This all makes for a very simple implementation of usleep that I’m sure some Berkeley student was very proud of. Unfortunately, this Berkeley student never expected multi-threading to exist, and the people implementing multi-threading just… forgot about usleep? As you might be able to tell from the above description of the code, if two threads are in usleep at once and one wakes up before the other, the one that wakes up afterwards is going to wake up into a process that has no SIGALRM handler, meaning it will—Alarm Clock.

This is such a textbook race condition that I find it incredible that it wasn’t caught and fixed. I can understand a user not knowing that signals and their handlers are per-process and not per-thread, but someone working on the C library needs to know this stuff. Once this had been figured out, the fix was simple: add an empty handler to SIGALRM. I did that, and Alarm Clock was no more.

Tying up some loose ends

The game occasionally freezes now

So the game has stopped crashing to Alarm Clock. That’s nice, but instead it’s taken to letting one of the two threads simply freeze. I was puzzled by this for quite some time, especially since the problem didn’t like to let itself happen when running through GDB, but eventually I managed to discover that it was freezing inside sigsuspend. Ugh, I bet that has a race condition too. I decided to take some advice I had received previously when complaining about usleep’s problems and just ditch it in favor of using select as a sleep.

The function select is intended to be used to wait for file descriptors to be ready. Say you’re waiting on a really slow storage device, or perhaps a network socket, and you don’t want to stop the entire program until it’s ready to be read from. select lets you specify lists of file descriptors and a timeout, and it will wait until either some of the file descriptors are ready, or until the timeout has passed.

Now, select does apparently have a limitation that makes it unsuitable for general use on modern systems, but I’m not interested in its primary purpose. The timeout is specified as a timeval, which consists of second and microsecond components, and it turns out that if select is called with all its lists being empty, it will simply wait for the timeout. Thanks to select being a system call, this all occurs inside the kernel meaning there’s hopefully a lower chance of process/thread-confusion-induced race conditions. The usage of this trick is very simple, here’s how I used it:

```c
struct timeval timeout;
timeout.tv_sec = milliSeconds / 1000;
timeout.tv_usec = (milliSeconds * 1000) % 1000000;
select(0, NULL, NULL, NULL, &timeout);
```

Not only was this approach free of random freezing caused by Sun assuming nobody would ever use the thread features they provided, it also seems to be more accurate than usleep was. It seems like the game (and more importantly, the audio code) was delaying for too long before this change. Most of the buffer underruns that were left vanished just like that! The only ones left are the occasional single-buffer miss during level loading, and those are barely noticeable during gameplay.

Double buffering

So, the screen still flashes occasionally. That’s due to the game’s rendering overwriting the framebuffer that X is actively trying to draw to the screen. The fix for this was simple and I had simply been delaying it out of a combination of laziness and prioritizing other things. The solution is double buffering. Double buffering (or more precisely, double buffering with page flipping) basically works like this: you have two buffers and draw your graphics onto one of them. When the time comes to display, you swap the two buffers so that the one you were drawing to is saved somewhere while it’s being put on the display. You can then safely draw into the other buffer without worrying about clobbering the one that’s being shown.

Implementing this was rather simple, just a matter of adding a second XShm image buffer, tweaking code and names to deal with there now being two of them, and adding the swapping logic to the Display function.
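The swapping logic can be sketched roughly as follows. This is not the port's code: plain byte arrays stand in for the two XShm images, the 8-bit pixel format is a placeholder, and the DoubleBuffer type and double_buffer_* function names are invented for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define FRAME_WIDTH  320
#define FRAME_HEIGHT 240

/* Two frames of placeholder 8-bit pixels; in a real X11 backend these
   would be the two shared-memory images. */
typedef struct {
    unsigned char pixels[2][FRAME_WIDTH * FRAME_HEIGHT];
    int back; /* index of the buffer currently being drawn into */
} DoubleBuffer;

void double_buffer_init(DoubleBuffer *db)
{
    assert(db != NULL);
    memset(db, 0, sizeof(*db));
}

/* The renderer only ever draws into the back buffer. */
unsigned char *double_buffer_back(DoubleBuffer *db)
{
    assert(db != NULL);
    return db->pixels[db->back];
}

/* Flip: the finished back buffer becomes the front buffer and is
   returned so it can be handed to the display, while the other buffer
   becomes the new drawing target. */
const unsigned char *double_buffer_flip(DoubleBuffer *db)
{
    const unsigned char *front;

    assert(db != NULL);
    front = db->pixels[db->back];
    db->back ^= 1;
    return front;
}
```

A display function built on this would call double_buffer_flip once per frame, pass the returned frame to whatever actually puts it on screen, and leave the renderer free to start on the next frame in the other buffer.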

Audio bit depth

As you may have guessed from the weird behavior of /dev/audio from before (no not that weird behavior, no not that one either), we’re already doing all the work of 16-bit audio, the setting was just on 8 for whatever reason. I increased this to 16 and it worked perfectly without any tweaks.

Conclusion

The port is missing a few features. The game’s resolution is stuck at 320x240 due to both performance and default system limits on SHM buffer sizes. This is problematic both due to the very small window size at normal Solaris resolutions, and due to the abysmally ugly font the game has for English at 320x240. The characters are merely 6 pixels wide, and the font doesn’t make the greatest use of that space, which makes some lowercase characters borderline unreadable. The Japanese font is quite readable though, so this doesn’t feel like a particularly hard limitation, but perhaps it is.

There’s no way to make config files directly on the Sun without poking bytes directly into a file. This is due to the normal CSE2 config program being written with OpenGL and Dear ImGui in mind, which I have good reason to suspect wouldn’t work well in this environment. I could have written a basic C program to mitigate this, but I didn’t and instead opted to provide a default config file directly in the repo with known working settings.

The port is not very general, and although a soundless version has run on my modern Linux laptop several times, I can’t guarantee that it still works, since code kept getting changed back and forth between the versions due to me being too lazy to look up the proper C macros to use in ifdefs. There are also several rather ugly hacks touching endianness, so I have no idea if the little-endian version works anymore.

Despite these shortcomings, I’m very satisfied with how the port turned out and am reasonably proud of it (that is to say, I don’t mind if people call my code here crap, but I feel good about having written it). The game runs at its full yet weird speed, the audio works as close to flawlessly as possible, and the experience is reasonably seamless. And… now I’m wondering if an OpenGL renderer could work… Nah, not worth figuring out.

The source code can be downloaded from this repo. Binaries are not provided.